Hello,

The services on my Server Pure aren’t running. Using the local IP address in the browser, I can see this error info:

Docker Error

Error: creating named volume "d22f2c60f2cab1ff3318f6da8ddbdecb60cb311d6f245b037fe4c84448193975": allocating lock for new volume: allocation failed; exceeded num_locks (2048)

Please contact support.

Clicking into the logs gives this detail:

2024-10-20T07:27:56-05:00 2024-10-20T12:27:56.390753Z INFO inner_main:setup_or_init:init: startos::init: Mounted Logs

2024-10-20T07:27:56-05:00 2024-10-20T12:27:56.436905Z INFO inner_main:setup_or_init:init:bind: startos::disk::mount::util: Binding /embassy-data/package-data/tmp/var to /var/tmp

2024-10-20T07:27:56-05:00 2024-10-20T12:27:56.439796Z INFO inner_main:setup_or_init:init:bind: startos::disk::mount::util: Binding /embassy-data/package-data/tmp/podman to /var/lib/containers

2024-10-20T07:27:56-05:00 2024-10-20T12:27:56.442126Z INFO inner_main:setup_or_init:init: startos::init: Mounted Docker Data

2024-10-20T07:27:58-05:00 2024-10-20T12:27:58.206995Z INFO inner_main:setup_or_init:init: startos::init: Enabling Docker QEMU Emulation

2024-10-20T07:27:58-05:00 2024-10-20T12:27:58.283723Z ERROR inner_main: startos::bins::start_init: Error: creating named volume "d22f2c60f2cab1ff3318f6da8ddbdecb60cb311d6f245b037fe4c84448193975": allocating lock for new volume: allocation failed; exceeded num_locks (2048)

2024-10-20T07:27:58-05:00 2024-10-20T12:27:58.283755Z DEBUG inner_main: startos::bins::start_init: Error: creating named volume "d22f2c60f2cab1ff3318f6da8ddbdecb60cb311d6f245b037fe4c84448193975": allocating lock for new volume: allocation failed; exceeded num_locks (2048)

2024-10-20T07:28:01-05:00 2024-10-20T12:28:01.080593Z ERROR inner_main:init: startos::context::diagnostic: Error: Docker Error: Error: creating named volume "d22f2c60f2cab1ff3318f6da8ddbdecb60cb311d6f245b037fe4c84448193975": allocating lock for new volume: allocation failed; exceeded num_locks (2048)

2024-10-20T07:28:01-05:00 : Starting diagnostic UI

2024-10-20T07:28:01-05:00 2024-10-20T12:28:01.080682Z DEBUG inner_main:init: startos::context::diagnostic: Error { source:

2024-10-20T07:28:01-05:00 0: Error: creating named volume "d22f2c60f2cab1ff3318f6da8ddbdecb60cb311d6f245b037fe4c84448193975": allocating lock for new volume: allocation failed; exceeded num_locks (2048)

2024-10-20T07:28:01-05:00 0:

2024-10-20T07:28:01-05:00 Location:

2024-10-20T07:28:01-05:00 startos/src/util/mod.rs:163

2024-10-20T07:28:01-05:00 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ SPANTRACE ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

2024-10-20T07:28:01-05:00 0: startos::init::init

2024-10-20T07:28:01-05:00 at startos/src/init.rs:192

2024-10-20T07:28:01-05:00 1: startos::bins::start_init::setup_or_init

2024-10-20T07:28:01-05:00 at startos/src/bins/start_init.rs:23

2024-10-20T07:28:01-05:00 2: startos::bins::start_init::inner_main

2024-10-20T07:28:01-05:00 at startos/src/bins/start_init.rs:198

2024-10-20T07:28:01-05:00 Backtrace omitted. Run with RUST_BACKTRACE=1 environment variable to display it.

2024-10-20T07:28:01-05:00 Run with RUST_BACKTRACE=full to include source snippets., kind: Docker, revision: None }

Ideas?

You’re not able to start your Docker services. There are a couple of reasons why this might be; one that comes to mind is being out of disk space.

What services are you running? Are you running anything where you’ve been uploading many large files?

Did you complete the initial setup process all the way to the SSH stage? Do you have that set up? (see: Start9 | Using SSH)

Hello, thanks, and sorry it took me so long to get back to this. Yes, I did set it up, and I am SSH’d in now as the start9 user.

What next?

In case you need general disk space output, here’s something:

start9@start:~$ df

Filesystem 1K-blocks Used Available Use% Mounted on

udev 16345528 0 16345528 0% /dev

tmpfs 3273840 1096 3272744 1% /run

overlay 16369184 30880 16338304 1% /

/dev/nvme0n1p3 15728640 3309932 11999956 22% /media/embassy/next

tmpfs 16369184 84 16369100 1% /dev/shm

tmpfs 5120 0 5120 0% /run/lock

/dev/nvme0n1p2 1046512 60052 986460 6% /boot

tmpfs 3273836 0 3273836 0% /run/user/1001

/dev/mapper/EMBASSY_BGOCZYMZUGFQHI7JQWBRNNCC5BM6PEL2RKGKJ3VMQC6B2RWLJJXA_main 8372224 1183484 6656068 16% /embassy-data/main

/dev/mapper/EMBASSY_BGOCZYMZUGFQHI7JQWBRNNCC5BM6PEL2RKGKJ3VMQC6B2RWLJJXA_package-data 3881730048 1236262440 2645271256 32% /embassy-data/package-data

tmpfs 3273836 0 3273836 0% /run/user/1000
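As a cross-check on the disk-space theory, the Use% column can be recomputed from the raw block counts. This is only a minimal Python sketch: the figures are copied from the `df` output above, and the rounding rule (used divided by used plus available, rounded up) is my assumption about how `df` derives that column.

```python
import math

# (mount point, used 1K-blocks, available 1K-blocks), copied from the df output above
rows = [
    ("/media/embassy/next", 3309932, 11999956),
    ("/boot", 60052, 986460),
    ("/embassy-data/main", 1183484, 6656068),
    ("/embassy-data/package-data", 1236262440, 2645271256),
]


def use_percent(used: int, available: int) -> int:
    """Assumed df rule: used / (used + available), rounded up to a whole percent."""
    return math.ceil(100 * used / (used + available))


for mount, used, available in rows:
    free_tib = available / 1024**3  # convert 1K-blocks to TiB
    print(f"{mount}: {use_percent(used, available)}% used, {free_tib:.2f} TiB free")
```

None of the data partitions comes out anywhere near full, so running out of disk space looks unlikely to be the cause here.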

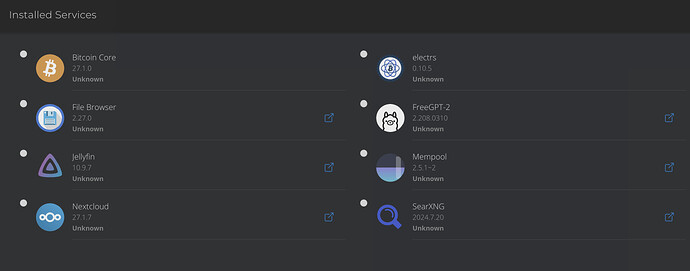

And these are the services I’ve been playing with:

Yes, I’ve added some large files for video serving, 2-4 LLMs for FreeGPT-2, and Bitcoin takes up quite a bit I think.

I did some digging of my own and found this:

(GitHub issue, opened 03:01PM - 11 May 22 UTC, closed 09:57AM - 18 Jul 22 UTC; labels: kind/bug, stale-issue, locked - please file new issue/PR)


**Is this a BUG REPORT or FEATURE REQUEST? (leave only one on its own line)**

/kind bug

**Description**

I got this recently.

```shell
# podman run --rm -ti alpine sh
Error: error allocating lock for new container: allocation failed; exceeded num_locks (2048)
```

I tried to create fewer than 30 containers concurrently and hit a deadlock; now no containers can be created at all.

Here is the total number of containers after creating them one by one:

```
# podman ps | wc -l
22
```

I changed `num_locks` limit from 2048 to 4096, but this does not help at all.

```
allocation failed; exceeded num_locks (4096)
```

After changing to `num_locks = 8192`, things seem to work.

I added some debug logging to the Podman code. When it tries to get a shm lock and succeeds, it prints a log line with the special prefix `xxdebug`.

When it tries to get a shm lock and fails, it also prints a log line with the special prefix `xxdebug`.

```diff
diff --git a/libpod/runtime_ctr.go b/libpod/runtime_ctr.go
index df7174ac6..68e9041c7 100644
--- a/libpod/runtime_ctr.go
+++ b/libpod/runtime_ctr.go
@@ -299,7 +299,7 @@ func (r *Runtime) setupContainer(ctx context.Context, ctr *Container) (_ *Contai
 	}
 	ctr.lock = lock
 	ctr.config.LockID = ctr.lock.ID()
-	logrus.Debugf("Allocated lock %d for container %s", ctr.lock.ID(), ctr.ID())
+	logrus.Infof("xxdebug Allocated lock %d for container %s name: %s", ctr.lock.ID(), ctr.ID(), ctr.Name())
 	defer func() {
 		if retErr != nil {
@@ -774,6 +774,9 @@ func (r *Runtime) removeContainer(ctx context.Context, c *Container, force, remo
 		} else {
 			logrus.Errorf("Free container lock: %v", err)
 		}
+		logrus.Errorf("xxdebug Free container lock failed, %d for container %s name: %s err: %v", c.lock.ID(), c.ID(), c.Name(), err)
+	} else {
+		logrus.Infof("xxdebug Free container lock success, %d for container %s name: %s", c.lock.ID(), c.ID(), c.Name())
 	}
```

I counted the log entries and there are only `68` in total, so why does it need a `num_locks` limit of 8192?



**Output of `podman version`:**

```
Client:       Podman Engine
Version:      4.2.0-dev
API Version:  4.2.0-dev
Go Version:   go1.18.1
Git Commit:   c379014ee4e57dc19669ae92f45f8e4c0814295b-dirty
Built:        Wed May 11 23:08:15 2022
OS/Arch:      linux/amd64
```

**Output of `podman info --debug`:**

```
host:
  arch: amd64
  buildahVersion: 1.26.1
  cgroupControllers:
  - cpuset
  - cpu
  - io
  - memory
  - hugetlb
  - pids
  - rdma
  - misc
  cgroupManager: cgroupfs
  cgroupVersion: v2
  conmon:
    package: /usr/bin/conmon is owned by conmon 1:2.1.0-1
    path: /usr/bin/conmon
    version: 'conmon version 2.1.0, commit: '
  cpuUtilization:
    idlePercent: 96.93
    systemPercent: 0.94
    userPercent: 2.13
  cpus: 20
  distribution:
    distribution: arch
    version: unknown
  eventLogger: file
  hostname: wudeng
  idMappings:
    gidmap: null
    uidmap: null
  kernel: 5.17.5-arch1-2
  linkmode: dynamic
  logDriver: k8s-file
  memFree: 14855860224
  memTotal: 33387909120
  networkBackend: cni
  ociRuntime:
    name: crun
    package: /usr/bin/crun is owned by crun 1.4.5-1
    path: /usr/bin/crun
    version: |-
      crun version 1.4.5
      commit: c381048530aa750495cf502ddb7181f2ded5b400
      spec: 1.0.0
      +SYSTEMD +SELINUX +APPARMOR +CAP +SECCOMP +EBPF +CRIU +YAJL
  os: linux
  remoteSocket:
    exists: true
    path: /run/podman/podman.sock
  security:
    apparmorEnabled: false
    capabilities: CAP_CHOWN,CAP_DAC_OVERRIDE,CAP_FOWNER,CAP_FSETID,CAP_KILL,CAP_NET_BIND_SERVICE,CAP_SETFCAP,CAP_SETGID,CAP_SETPCAP,CAP_SETUID,CAP_SYS_CHROOT
    rootless: false
    seccompEnabled: true
    seccompProfilePath: /etc/containers/seccomp.json
    selinuxEnabled: false
  serviceIsRemote: false
  slirp4netns:
    executable: /usr/bin/slirp4netns
    package: /usr/bin/slirp4netns is owned by slirp4netns 1.2.0-1
    version: |-
      slirp4netns version 1.2.0
      commit: 656041d45cfca7a4176f6b7eed9e4fe6c11e8383
      libslirp: 4.7.0
      SLIRP_CONFIG_VERSION_MAX: 4
      libseccomp: 2.5.4
  swapFree: 17179865088
  swapTotal: 17179865088
  uptime: 23m 10.17s
plugins:
  log:
  - k8s-file
  - none
  - passthrough
  network:
  - bridge
  - macvlan
  - ipvlan
  volume:
  - local
registries:
  hub.k8s.lan:
    Blocked: false
    Insecure: true
    Location: hub.k8s.lan
    MirrorByDigestOnly: false
    Mirrors: null
    Prefix: hub.k8s.lan
    PullFromMirror: ""
  search:
  - docker.io
store:
  configFile: /etc/containers/storage.conf
  containerStore:
    number: 36
    paused: 0
    running: 21
    stopped: 15
  graphDriverName: overlay
  graphOptions:
    overlay.mountopt: nodev,metacopy=on
  graphRoot: /var/lib/containers/storage
  graphRootAllocated: 178534772736
  graphRootUsed: 136645885952
  graphStatus:
    Backing Filesystem: extfs
    Native Overlay Diff: "false"
    Supports d_type: "true"
    Using metacopy: "true"
  imageCopyTmpDir: /var/tmp
  imageStore:
    number: 63
  runRoot: /run/containers/storage
  volumePath: /var/lib/containers/storage/volumes
version:
  APIVersion: 4.2.0-dev
  Built: 1652281695
  BuiltTime: Wed May 11 23:08:15 2022
  GitCommit: c379014ee4e57dc19669ae92f45f8e4c0814295b-dirty
  GoVersion: go1.18.1
  Os: linux
  OsArch: linux/amd64
  Version: 4.2.0-dev
```

**Package info (e.g. output of `rpm -q podman` or `apt list podman`):**

```
build from main branch
```

**Have you tested with the latest version of Podman and have you checked the Podman Troubleshooting Guide? (https://github.com/containers/podman/blob/main/troubleshooting.md)**

Yes

**Additional environment details (AWS, VirtualBox, physical, etc.):**

I use the Nomad Podman driver to create containers, which may send concurrent requests to the libpod REST API.

I gathered some information without doing anything destructive:

start9@start:~$ sudo podman version

Client: Podman Engine

Version: 4.5.1

API Version: 4.5.1

Go Version: go1.19.8

Built: Thu Jan 1 00:00:00 1970

OS/Arch: linux/amd64

start9@start:~$ sudo podman ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

34b4519aab14 docker.io/start9/filebrowser/main:2.27.0 4 weeks ago Created filebrowser.embassy

0b8a6a26f2ae docker.io/start9/nextcloud/main:27.1.7 4 weeks ago Created nextcloud.embassy

76e1f264ba97 docker.io/start9/searxng/main:2024.7.20 4 weeks ago Created searxng.embassy

1305795a2877 docker.io/start9/bitcoind/main:27.1.0 4 weeks ago Created bitcoind.embassy

84cbb7301d01 docker.io/start9/jellyfin/main:10.9.7 4 weeks ago Created jellyfin.embassy

283089c5c454 docker.io/start9/mempool/main:2.5.1.2 4 weeks ago Created mempool.embassy

2707deaf4597 docker.io/start9/electrs/main:0.10.5 4 weeks ago Created electrs.embassy

820d212871e5 docker.io/start9/free-gpt/main:2.208.0310 4 weeks ago Created free-gpt.embassy

2eb146c96859 docker.io/start9/x_system/utils:latest sleep infinity 2 weeks ago Up 2 weeks netdummy

start9@start:~$ sudo podman volume ls | wc -l

2039

start9@start:~$
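Putting those numbers next to the limit in the error message gives a rough picture of where the locks might be going. This is a back-of-the-envelope Python sketch, not a statement of how Podman actually accounts for locks: it assumes `podman volume ls` prints one header line, that every container and every volume holds exactly one lock, and that nothing else (pods, for example) holds any.

```python
NUM_LOCKS = 2048        # limit quoted in the error message
volume_ls_lines = 2039  # output of `sudo podman volume ls | wc -l`
containers = 9          # rows in the `sudo podman ps -a` output above

# Assumption: the first line of `podman volume ls` is a header, not a volume.
volumes = volume_ls_lines - 1

# Assumption: one lock per container and one per volume, nothing else.
locks_in_use = volumes + containers

print(f"{locks_in_use} of {NUM_LOCKS} locks accounted for, "
      f"{NUM_LOCKS - locks_in_use} left")
```

If that accounting is even roughly right, the volumes alone nearly exhaust the lock pool, which would fit an error raised while creating a new volume.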

I’m curious if you think I should prune the volumes (e.g. sudo podman volume prune).
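Before pruning anything, it may be worth seeing which volumes are actually unused. A minimal Python sketch of that check follows; it assumes the JSON produced by `podman volume ls --format json` is an array of objects with a `Name` key, and the sample data and volume names in it are made up for illustration.

```python
import json


def volume_names(volume_ls_json: str) -> list[str]:
    """Return the volume names from `podman volume ls --format json` output.

    Assumes the output is a JSON array of objects that each have a "Name" key.
    """
    return [entry["Name"] for entry in json.loads(volume_ls_json)]


# Made-up sample standing in for real output; these names are hypothetical.
sample = '[{"Name": "aaa111", "Driver": "local"}, {"Name": "bbb222", "Driver": "local"}]'

names = volume_names(sample)
print(f"{len(names)} volumes would be candidates for pruning")
for name in names:
    print(name)
```

On the server itself, the input would come from something like `sudo podman volume ls --filter dangling=true --format json`, which should list only volumes not attached to any container.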

I await your next suggestion.

StuPleb (November 12, 2024, 2:25pm):

I’m not clear on what might have caused this… maybe installing and uninstalling Services in quick succession?

Does your StartOS diagnostic screen offer you a System Rebuild button?

jadagio (November 13, 2024, 10:13am):

It does. I’ll try that and report back on the outcome.

